Use the Google URL Inspection API with Python to Inspect URLs in Bulk

In January 2022, Google announced the Search Console URL Inspection API. This is great news for SEOs who want to diagnose indexing issues at scale. Several paid SEO services have already integrated the API into their own tools, and it has the potential to change how technical SEOs work on large websites.

To help, I have created my own free tool to analyse URLs in bulk and find out what Google knows about each page. Whilst tools like Screaming Frog are also able to do this, there are several advantages to my solution:

- It's free!

- Less drain on your computer. Screaming Frog is great, but it uses almost as much RAM as a single Chrome tab

- Dedicated purpose; you don't have to run all of Screaming Frog's other features at the same time

No coding knowledge is required for this script, but the API key setup can be a bit fiddly.

Setting up API Key

This is the most difficult part of the process. Thankfully, Jean-Christophe Chouinard has already made a pretty decent guide, which can be found here.

Whilst I won't go into the full detail here, the basic steps are:

- Enable Search Console API, click here.

- Create service account, click here.

- Click "Manage Service Accounts" to create a service account.

- Click "Actions > Manage Keys" to create API Key.

- Download JSON file and rename to api_key.json.

- Add service account email address as an owner to your Search Console profile.
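If the key setup goes wrong, it is often because the wrong kind of key was downloaded or the wrong email address was added to Search Console. The sketch below is one way to sanity-check the downloaded file before going any further. It assumes the file is a standard service account key containing `type`, `client_email` and `private_key` fields; the function names are my own and are not part of the script.

```python
import json

# Fields a service account key file is expected to contain (assumed minimum set).
REQUIRED_FIELDS = ("type", "client_email", "private_key")


def check_service_account_key(key):
    """Return the service account email if the parsed key looks valid.

    Raises ValueError if a required field is missing or the key is not
    a service account key.
    """
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError(f"api_key.json is missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"expected a service account key, got type {key['type']!r}")
    return key["client_email"]


def load_and_check(path="api_key.json"):
    """Read the key file from disk and run the checks above."""
    with open(path, encoding="utf-8") as f:
        return check_service_account_key(json.load(f))
```

Calling `load_and_check()` in the folder containing `api_key.json` returns the email address that needs to be added as an owner in Search Console.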

It can take a bit of fiddling to get it right, but once done you're ready to go. The rest of the process may seem daunting, but no coding knowledge is required.

Running the Script

The first thing you need to do is create your URL list. This is very simple: all you need is a single CSV with all of your URLs in the first column and a heading of "URL" at the top. That's it.
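For anyone curious how a file in that shape can be read, here is a minimal sketch using Python's built-in `csv` module. The function name `read_urls` is hypothetical, and skipping blank rows and duplicates is my own choice (it avoids wasting API requests), not necessarily what the script does.

```python
import csv


def read_urls(lines):
    """Return the unique, non-empty values from the "URL" column.

    `lines` can be an open file or any iterable of CSV lines.
    """
    reader = csv.DictReader(lines)
    if reader.fieldnames is None or "URL" not in reader.fieldnames:
        raise ValueError('the CSV needs a "URL" heading in the first row')

    urls = []
    seen = set()
    for row in reader:
        url = (row["URL"] or "").strip()
        # Skip blanks and anything already collected, keeping the original order.
        if url and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls
```

With a file on disk this would be used as `with open("urls.csv", newline="", encoding="utf-8-sig") as f: urls = read_urls(f)`, where `urls.csv` is a placeholder name and `utf-8-sig` copes with the byte order mark some spreadsheet tools add.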

Once you have your list, open this script with Google Colab and make a copy in your own Google Drive.

Upload your api_key.json file to the same folder your script is saved in.

Before we run the script, there are just a couple of minor tweaks we need to make. First of all, you need to update the row starting with "creds" to include the folder in your Google Drive where your script has been saved, as in the screenshot below:

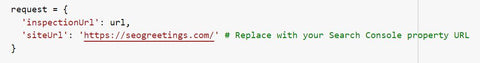

Next, you need to update the row starting with 'siteurl' with the URL of your Search Console profile, as below:
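To give a feel for what those two rows feed into, here is a rough sketch of a single inspection request made with the `google-auth` and `google-api-python-client` libraries. The variable names `creds` and `siteurl` come from the text above; the Drive path and property URL are placeholders, the helper names are hypothetical, and the actual script may be organised quite differently.

```python
# Read-only access is enough for URL inspection.
SCOPES = ["https://www.googleapis.com/auth/webmasters.readonly"]

# Placeholder values: swap in your own Drive folder and Search Console property.
# The path assumes Google Drive is mounted at /content/drive in Colab.
creds = "/content/drive/MyDrive/your-folder/api_key.json"
siteurl = "https://www.example.com/"


def build_request_body(page_url, site_url):
    """Build the JSON body for one URL inspection request."""
    return {"inspectionUrl": page_url, "siteUrl": site_url}


def inspect_url(page_url, key_path=creds, site_url=siteurl):
    """Ask Search Console what Google knows about a single page."""
    # Imported here so build_request_body works without the Google libraries.
    from google.oauth2 import service_account
    from googleapiclient.discovery import build

    credentials = service_account.Credentials.from_service_account_file(
        key_path, scopes=SCOPES
    )
    service = build("searchconsole", "v1", credentials=credentials)
    body = build_request_body(page_url, site_url)
    return service.urlInspection().index().inspect(body=body).execute()
```

The `siteurl` value has to match the property exactly as it appears in Search Console, including the trailing slash for URL-prefix properties. Domain properties use the form `sc-domain:example.com` instead.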

And that's it! Just click on the play icon in the top left, then upload your CSV using the link at the bottom of the screen. Once complete, you will end up with a CSV that looks as follows (columns narrowed to fit it all in):
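Each API response is a nested JSON object, so it has to be flattened into one row per URL before it can go into a CSV. The sketch below shows one way to do that, assuming the index details sit under `inspectionResult.indexStatusResult` in the response. The selection of fields and the name `flatten_result` are my own; the script's actual columns may differ.

```python
# Index status fields to keep, in output column order (an assumed selection).
FIELDS = [
    "verdict",
    "coverageState",
    "robotsTxtState",
    "indexingState",
    "pageFetchState",
    "lastCrawlTime",
    "googleCanonical",
    "userCanonical",
]


def flatten_result(url, response):
    """Turn one API response into a flat dict suitable for a CSV row."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    row = {"URL": url}
    for field in FIELDS:
        # Fields the API leaves out (e.g. for never-crawled pages) become blanks.
        row[field] = status.get(field, "")
    return row
```

A list of these rows can then be written out with `csv.DictWriter`, using `["URL"] + FIELDS` as the column names.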

Error Handling

Here's the caveat: currently, this API is a bit glitchy. At the time of writing, about 2% of API requests fail. If a request fails, the script logs a failed attempt for that URL and moves on to the next. The error is recorded in the output CSV.
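The "log it and move on" behaviour described above can be sketched roughly as follows. This is an illustration rather than the script's actual code: `inspect_all` and the result keys are hypothetical names, and `inspect` stands for whatever function makes the API call for a single URL.

```python
def inspect_all(urls, inspect):
    """Inspect every URL, recording failures instead of stopping.

    `inspect` is any callable that takes a URL and returns the API
    response, raising an exception if the request fails.
    """
    results = []
    for url in urls:
        try:
            response = inspect(url)
        except Exception as exc:  # broad on purpose: one bad URL should not end the run
            results.append({"URL": url, "status": "failed", "response": None, "error": str(exc)})
        else:
            results.append({"URL": url, "status": "ok", "response": response, "error": ""})
    return results
```

Catching every exception is a blunt instrument, but for a long batch job it means a single failed request costs one row rather than the whole run.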

As a result, you may need to run the script in batches and repeat the process by uploading the failed URLs again. It's a bit of a pain, but hopefully the API will become more stable over time and make this easier to manage.
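Picking the failed URLs out of the output by hand gets tedious, so here is a small sketch that builds a fresh upload file from them. It assumes the output CSV has a `URL` column and an error column that is blank for successful rows. The column name `error` is a guess, so check it against your own output before relying on this.

```python
import csv
import io


def failed_urls(lines, error_column="error"):
    """Return URLs from the output CSV whose error column is not blank."""
    return [
        row["URL"]
        for row in csv.DictReader(lines)
        if (row.get(error_column) or "").strip()
    ]


def as_url_csv(urls):
    """Format URLs as CSV text with a "URL" heading, ready to upload again."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["URL"])
    writer.writerows([url] for url in urls)
    return buffer.getvalue()
```

The text returned by `as_url_csv` can be saved to a new file and uploaded in place of the original list on the next run.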

Limits

Currently, the API is limited to 2,000 requests a day for each Search Console property. This will be adequate for most websites, but larger sites may require URLs to be inspected in batches over time.
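For larger sites, splitting the URL list into day-sized batches up front makes the process easier to manage. A minimal sketch, with `daily_batches` as a hypothetical helper name and the limit taken from the figure above:

```python
DAILY_LIMIT = 2000  # requests per day, as quoted above


def daily_batches(urls, limit=DAILY_LIMIT):
    """Split a list of URLs into consecutive batches of at most `limit`."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return [urls[start:start + limit] for start in range(0, len(urls), limit)]
```

A list of 4,500 URLs, for example, comes back as three batches of 2,000, 2,000 and 500, one for each day. Bear in mind that any failed URLs you retry also count towards the daily total.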

With thanks to

I would like to thank Tobias Willmann and Jean-Christophe Chouinard for putting in the groundwork for this script. Without their awesome work, this script would not have been possible.